My social media feeds have been inundated lately with bold assertions and proclamations about the future of product management.

- Do we still need product managers?

- Is AI going to replace product teams?

- Has product…died?

The claims tend to follow a predictable pattern:

- AI writes user stories and PRDs.

- AI generates user personas.

- AI summarizes feedback and explores pain points.

- AI prioritizes roadmaps.

It makes for a compelling headline, often pushed by companies or consultants selling tools or services that claim to automate these tasks. These headlines grab attention, spark debate, and tap into the anxiety many product managers feel as AI reshapes their role.

But this isn’t a funeral. It’s a reckoning. The old, process-heavy, adaptability-light version of product won’t survive. But that’s not the end of product. It’s the beginning of something better. Beneath the clickbait is a valid call to evolve: product management isn’t dying, it’s transforming.

What Product Really Is

Before we talk about what’s changing, let’s be clear about what product is.

Product management isn’t a set of tasks. It’s a discipline of focus, alignment, and judgment.

It’s about understanding problems deeply, prioritizing effectively, and creating the conditions for great teams to build the right things.

AI can assist with this work, but it can’t own it. And if you think product is just a list of tasks?

You’re already doing it wrong.

Why People Want Product Dead

Product is often seen as a bottleneck: the layer that slows down builders with meetings, documents, and decisions that feel like bureaucracy. In fast-moving, engineering-led organizations, product often looks like something to automate rather than a discipline rooted in insight, prioritization, and alignment.

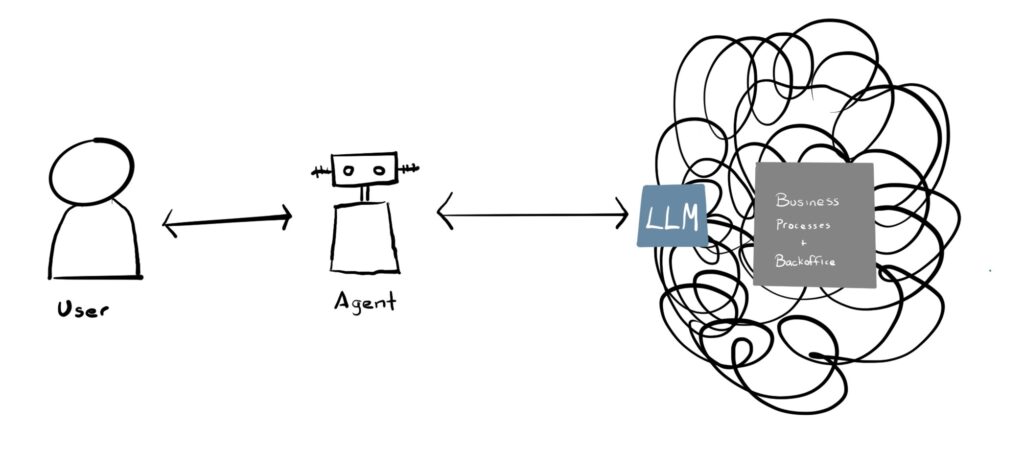

AI has only amplified that impulse. With tools that can instantly generate specs, synthesize feedback, and mock up features, product starts to look like a collection of tasks rather than a strategic function. And if it’s just tasks, why not let the machines do it?

That thinking is tempting, especially to companies chasing speed and efficiency, but it’s shortsighted. The “product is dead” narrative keeps getting airtime anyway, because companies want it to be true even though it misses the bigger picture.

Speed Over Strategy: Engineering-Led Cultures Prefer Shipping

In many engineering-led cultures, especially in AI, there’s a deep bias toward building: shipping fast, testing fast, and iterating fast. AI has collapsed the cost of experimentation. With today’s AI tools, it’s never been easier to vibe code your way to a working prototype, rapidly stitching together a demo with AI and low-code tools. You can spin up UIs, connect APIs, and generate sample data in hours instead of weeks. It looks and feels like progress.

But without intention, you’re not building products, you’re building distractions. You’re producing, not progressing. You’re generating output, not outcomes.

And that’s the trap: it feels like you’re moving faster, but without a clear understanding of the customer, the problem, and the strategy, you’re either moving in circles or heading in the wrong direction entirely.

Task-Based Thinking: Why Product Looks Replaceable

The appeal is obvious: automate the “middle layer,” and suddenly, your team is leaner, faster, and cheaper. Product work is reframed as a series of repeatable tasks: write a story, generate a persona, summarize feedback, and stack rank a backlog. It’s presented as something mechanical, like configuring an assembly line, rather than requiring focus, intention, and insight.

But this framing is dangerously incomplete. These aren’t just tasks; they’re judgment calls. They ensure teams solve the right problems in the right way at the right time. Discovery without direction is noise. Strategy without prioritization is chaos. Specifications without insight are just empty documentation.

AI can assist with product work, but reducing that work to a checklist makes it easier to sell a tool and harder to build anything meaningful.

A Convenient Story: The Simplified Narrative That Sells

The “product is dead” narrative promises clarity: eliminate the middle layer, remove the blockers, and let machines and makers do what they do best. That story plays perfectly in a world obsessed with efficiency and in organizations that already see product management as overhead.

But the truth is messier.

Good product managers don’t just write tickets or relay requests. They bring cohesion to chaos. They align teams around a shared understanding of the customer, the problem, and the goal. They ask the hard questions that AI can’t answer on its own.

Should we build this? Why now? What matters most?

AI can produce content, but not conviction. It can analyze feedback, but not frame a vision. And it can’t resolve the tensions between user needs, business goals, and technical constraints — at least not without someone to interpret, prioritize, and lead.

The “product is dead” story works because it feels simple. But building good products was never simple. Removing the people who deal with complexity doesn’t make it go away. It just makes it your customer’s problem.

The Companies Who Will Regret This

Here’s my prediction:

- The companies that cut product first will move fastest, at least initially.

- Their roadmaps will fill up. Their launches will accelerate. Their demos will look impressive.

But then, slowly and quietly, things will start to break.

- Customer engagement will slip.

- Retention will fall.

- New features will feel disconnected from real needs.

- Teams will build for what’s easy, not for what’s valuable.

The companies that sold them those shiny new tools, the ones that promised to replace product? They’ll be long gone, moving on to the next buyer or looking for the next hype cycle to exploit.

Meanwhile, the companies that doubled down on the real craft of product, the ones that invested in judgment, customer obsession, and asking why before what, will still be standing (and thriving) while others fade. They’ll have products that resonate and evolve with their customers.

Because tools don’t create strategy.

People do.

Old Product Might Be Dead — And That’s a Good Thing

Now, here’s where I’ll agree with the AI evangelists: old product needed to change.

The days of PMs acting as backlog managers, Jira ticket writers, or meeting schedulers? Yeah, that should die.

PMs who only handed off requirements to engineering? Gone.

PMs who never talked to customers? Dead.

Let’s be honest: many organizations misdefined the PM role. They hired process managers and called it product. They built layers of communication, not layers of clarity. They were managing workflows, not products. They were pushing tickets, not pushing strategy. Those roles are vulnerable not because of AI, but because they weren’t doing product in the first place.

The version of product that survives this shift and is worth fighting for is sharper, faster, and more essential than ever. It’s not about being an intermediary between engineering and design. It’s about creating clarity, focus, and vision where there was once noise and confusion.

It looks like:

- Problem curation over solution obsession: It’s not about finding the quickest fix or building what’s easiest. It’s about understanding what problem we’re solving, for whom, and why it matters.

- Judgment over process: AI can help automate the steps, but it can’t tell you if you’re solving the right problem or if the timing is right. Good product management is still a series of thoughtful decisions, not just steps in a flowchart.

- Context over control: Dictating requirements from above doesn’t work anymore. Context, shared understanding, and alignment are what drive teams to collaborate effectively, not command and control.

- Collaboration over command: PMs are the glue that brings engineering, design, and business together. But that means being a partner and enabler, not a dictator. Collaboration is the new currency in product development.

- Customer truth over corporate theater: Building the right product requires honest feedback, real conversations with customers, and deep empathy. It’s not about making the product look good on paper; it’s about making it work for the people who use it.

The old way of doing product is over. But this isn’t about mourning its loss. It’s about embracing a new, more purposeful approach. The role of product management is evolving, and in many ways, that’s a huge opportunity to do better, build better, and have a bigger impact.

The King Is Dead. Long Live the King.

The “product is dead” narrative is loud right now because it’s easy. It’s easier to believe we can automate judgment than it is to build it. Easier to replace complexity than to wrestle with it. Easier to promise speed than to commit to substance.

But the companies that endure — the ones that create real value, not just hype-fueled demos — will be the ones that lean into the harder, more human work.

They’ll treat product not as a process to optimize, but as a practice to sharpen.

They’ll embrace AI as a powerful tool — not a replacement for the thinking, intuition, and collaboration that make great products possible.

They’ll stop treating product like a middle layer to cut, and start recognizing it as a critical function to elevate.

Because here’s the truth: the best product teams won’t just survive this shift. They’ll lead it.

They’ll be faster because they’re clearer. Smarter because they’re humbler. Stronger because they’re more aligned.

Product isn’t dead. Bad product is dead. Shallow product is dead. Performative product is dead.

The age of product isn’t over. The age of better product is just beginning.

Long live product — not as it was, but as it needs to be.